The Racialized Face: Facial Recognition Technology and Histories of Criminalization

2nd Place, People's Choice, Winter 2021

By: Jessica Castro, Emily Davidson, Sarah Isen

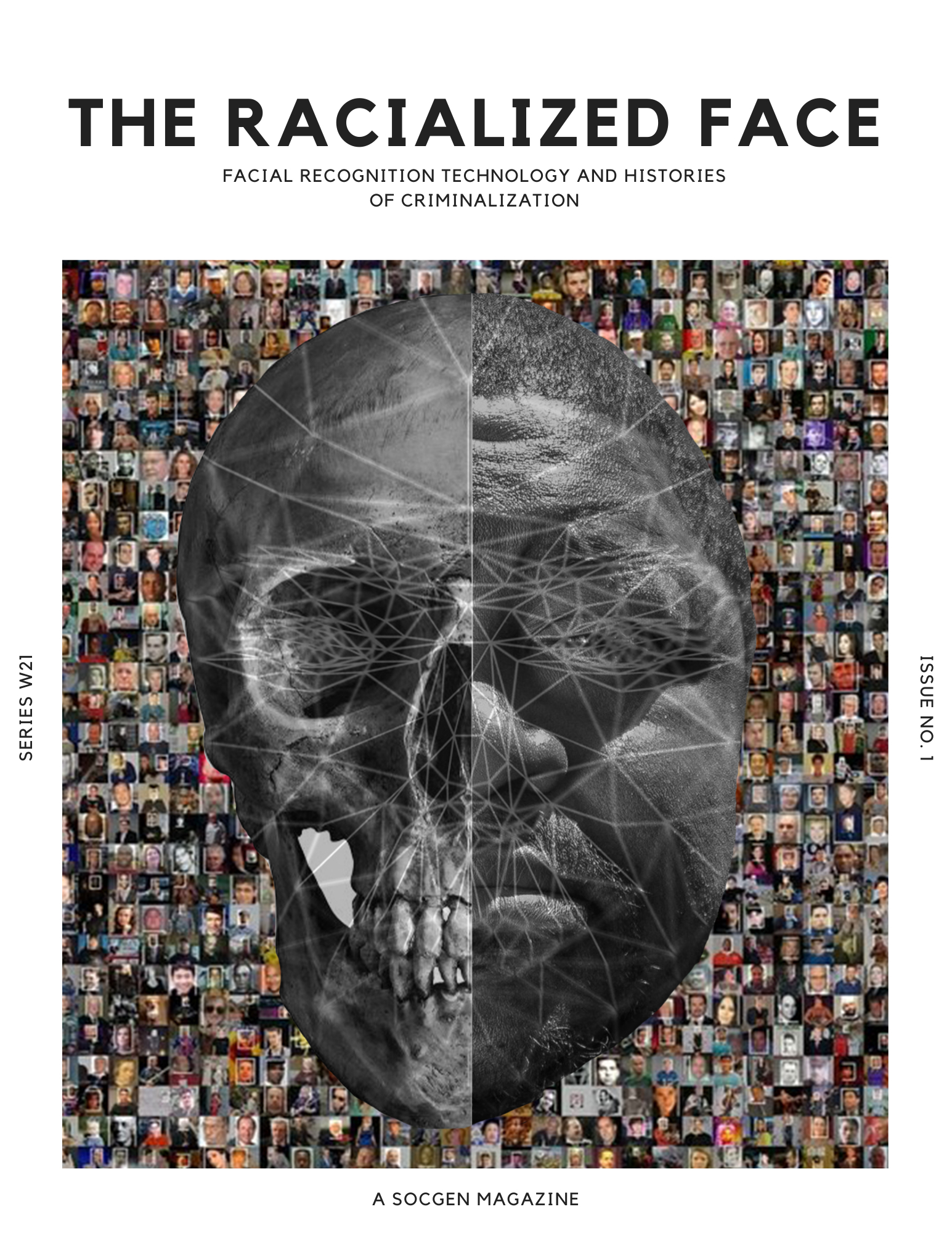

Is facial recognition technology really a scientific and cultural advancement, or will it only continue the historical tradition of criminalizing faces of color under the guise of scientific objectivity?

In January of 2020, Robert Julian-Borchak Williams was wrongfully accused by an algorithm. This event sparked conversation about one of law enforcement’s newest biometric advancements: facial recognition technology. The danger of facial recognition technology is that it is much less accurate for people of color, women, and younger people. Yet law enforcement across the country uses it to aid in arrests, convictions, and investigations. The algorithmic bias within facial recognition technology creates serious consequences for the health and liberty of the public, putting privacy, autonomy, and the right to a fair trial at extreme risk.

Within facial recognition technology, bias is rendered invisible under the guise of technological objectivity. The technology continues the historical tradition of quantifying physical form, especially to evaluate racial differences. We explore how this modern technology draws from “racialized face” pseudosciences that have been used to criminalize certain groups in the past.

Facial recognition technology poses a threat to civil liberties. It has the potential to be used to covertly surveil the public and track our movements and activities on an unprecedented level. Furthermore, it is biased. Justice is in order for people like Robert Julian-Borchak Williams, who have been wrongfully accused of crimes as a result of facial recognition technology. As technology advances, it has become increasingly clear that science cannot and does not exist outside of established sociocultural notions of race and identity. Facial recognition technology, just like all scientific advancements, must be interrogated for how it perpetuates the criminalization of marginalized groups before it can be ethically employed.

"This project won second place in the People’s Choice award for its in-depth investigation of a technology that is more and more ubiquitous every day. The depth of knowledge, the breadth of perspectives, and the expert integration of all these issues make this magazine a sophisticated and startling read." – Professor Kelty
